
GitHub - Deepseek-ai/DeepSeek-Coder: DeepSeek Coder: let the Code Writ…


Author: Robby | Comments: 0 | 25-03-15 12:38


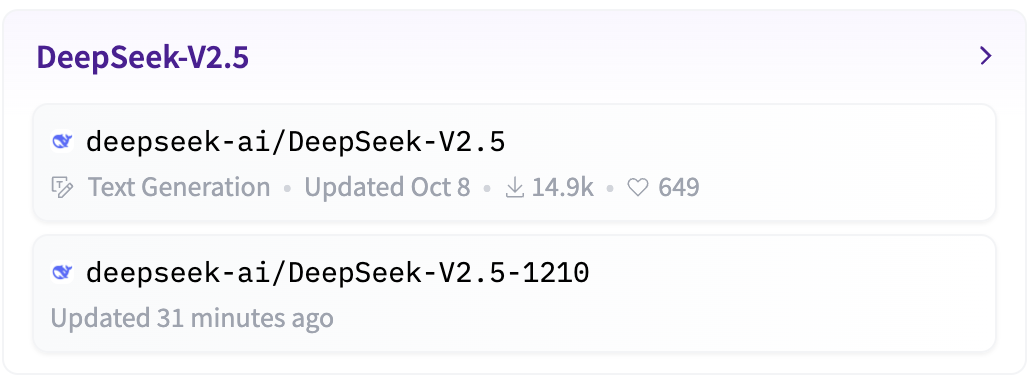

However, there is no indication that DeepSeek will face a ban in the US. Will DeepSeek get banned in the US? Users can select the "DeepThink" feature before submitting a question to get results that use DeepSeek-R1’s reasoning capabilities. To get started with the DeepSeek API, you will need to register on the DeepSeek Platform and obtain an API key. In fact, it beats OpenAI on both key benchmarks. Below, we highlight performance benchmarks for each model and show how they stack up against one another in key categories: mathematics, coding, and general knowledge. One noticeable difference between the models is their general knowledge strengths. Breakthrough in open-source AI: DeepSeek, a Chinese AI company, has released DeepSeek-V2.5, a powerful new open-source language model that combines general language processing and advanced coding capabilities. While export controls may have some negative side effects, the overall effect has been to slow China’s ability to scale up AI in general, as well as the specific capabilities that originally motivated the policy around military use. 1. Follow the instructions to modify the nodes and parameters or add additional APIs from different services, as each template may require specific changes to suit your use case.


Yes, this will help in the short term (again, DeepSeek would be even more effective with more computing), but in the long run it simply sows the seeds for competition in an industry, chips and semiconductor equipment, over which the U.S. Organizations that use this model gain a significant advantage by staying ahead of industry trends and meeting customer demands. This is a crucial question for the development of China’s AI industry. As the TikTok ban looms in the United States, this is always a question worth asking about a new Chinese company. Early testing released by DeepSeek suggests that its quality rivals that of other AI products, while the company says it costs less and uses far fewer specialized chips than its competitors do. Only by comprehensively testing models against real-world scenarios can users identify potential limitations and areas for improvement before the solution goes live in production. Reasoning data was generated by "expert models". On AIME 2024, DeepSeek-R1 scores 79.8%, slightly above OpenAI o1-1217's 79.2%. This benchmark evaluates advanced multistep mathematical reasoning. On SWE-bench Verified, DeepSeek-R1 scores 49.2%, slightly ahead of OpenAI o1-1217's 48.9%. This benchmark focuses on software engineering tasks and verification. On GPQA Diamond, OpenAI o1-1217 leads with 75.7%, while DeepSeek-R1 scores 71.5%. This benchmark measures the model’s ability to answer general-purpose knowledge questions.
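The benchmark figures quoted above are easier to compare side by side. The short Python sketch below only restates those numbers and computes the gap in percentage points; the dictionary layout and the `margins` helper are illustrative choices, not part of any official evaluation tooling.

```python
# Scores as quoted in the post: (DeepSeek-R1, OpenAI o1-1217), in percent.
scores = {
    "AIME 2024": (79.8, 79.2),
    "SWE-bench Verified": (49.2, 48.9),
    "GPQA Diamond": (71.5, 75.7),
}


def margins(table):
    """Return DeepSeek-R1 minus o1-1217 for each benchmark, rounded to 0.1."""
    return {name: round(r1 - o1, 1) for name, (r1, o1) in table.items()}


for name, gap in margins(scores).items():
    leader = "DeepSeek-R1" if gap > 0 else "OpenAI o1-1217"
    print(f"{name}: {leader} leads by {abs(gap):.1f} points")
```

On these numbers the two math and software engineering gaps are under one point, while the general-knowledge gap on GPQA Diamond is the only one larger than four points.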


On Codeforces, OpenAI o1-1217 leads with 96.6%, while DeepSeek-R1 achieves 96.3%. This benchmark evaluates coding and algorithmic reasoning capabilities. Both models demonstrate strong coding capabilities. The more jailbreak research I read, the more I think it’s mostly going to be a cat-and-mouse game between smarter hacks and models getting smart enough to know they’re being hacked, and right now, for this type of hack, the models have the advantage. It was trained on 87% code and 13% natural language, offering free open-source access for research and commercial use. But frankly, a lot of the research is published anyway. They do a lot less post-training alignment here than they do for DeepSeek LLM. But wait, the mass here is given in grams, right? However, selling on Amazon can still be a highly profitable venture for those who approach it with the right strategies and tools. This approach gives a clear view of how the model evolves over time, particularly in terms of its ability to handle complex reasoning tasks. Imagine that the AI model is the engine; the chatbot you use to talk to it is the car built around that engine. For detailed instructions on how to use the API, including authentication, making requests, and handling responses, you can refer to DeepSeek's API documentation.
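As a rough illustration of the three steps mentioned above (authentication, making a request, handling the response), here is a minimal Python sketch using only the standard library. The endpoint URL, the `deepseek-reasoner` model name, the `DEEPSEEK_API_KEY` environment variable, and the response shape are assumptions made for this example; confirm them against DeepSeek's API documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumed endpoint (OpenAI-style chat completions); verify in the official docs.
API_URL = "https://api.deepseek.com/chat/completions"


def build_request(prompt, api_key, model="deepseek-reasoner"):
    """Build an authenticated POST request without sending it."""
    payload = {
        "model": model,  # assumed model identifier for R1
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # bearer-token authentication
        },
        method="POST",
    )


def ask(prompt, api_key):
    """Send the request and return the first reply's text (assumed shape)."""
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if key:
        print(ask("What is 17 * 24?", key))
```

Keeping request construction separate from the network call makes it possible to inspect the headers and payload before any key is used.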

DeepSeek provides programmatic access to its R1 model through an API that allows developers to integrate advanced AI capabilities into their applications. DeepSeek-Coder-V2 expanded the capabilities of the original coding model. According to reports, DeepSeek's cost to train its latest R1 model was just $5.58 million. Their latest model, DeepSeek-R1, is open-source and considered the most advanced. DeepSeek Coder was the company's first AI model, designed for coding tasks. DeepSeek-R1 shows strong performance in mathematical reasoning tasks. This reinforcement learning allows the model to learn on its own through trial and error, much like how you learn to ride a bike or perform certain tasks. I take responsibility. I stand by the post, including the two biggest takeaways that I highlighted (emergent chain-of-thought via pure reinforcement learning, and the power of distillation), and I mentioned the low cost (which I expanded on in Sharp Tech) and chip ban implications, but these observations were too localized to the current state of the art in AI.
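To make "learning through trial and error" concrete, here is a toy Python sketch of an epsilon-greedy multi-armed bandit. It is a generic textbook illustration of reinforcement learning and not DeepSeek's training method; the payout probabilities, step count, and function name are all made up for the example.

```python
import random


def run_bandit(payout_probs, steps=5000, epsilon=0.1, seed=0):
    """Estimate each arm's average reward by trial and error."""
    rng = random.Random(seed)
    counts = [0] * len(payout_probs)
    estimates = [0.0] * len(payout_probs)
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random arm.
            arm = rng.randrange(len(payout_probs))
        else:
            # Exploit: pick the arm that currently looks best.
            arm = max(range(len(payout_probs)), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < payout_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental running mean of the rewards seen for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


estimates, counts = run_bandit([0.2, 0.5, 0.8])
best_arm = max(range(len(estimates)), key=lambda a: estimates[a])
print("estimated rewards:", [round(e, 2) for e in estimates])
print("best arm found:", best_arm)
```

The agent is never told which arm pays best; it discovers this only from the rewards it observes, which is the trial-and-error idea in miniature.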

